This blog is part 2 of a three-part article on maturity models. For more information, read part 1 and part 3.

Business alignment maturity

Business alignment maturity rests on the core business value foundation of optimizing profitable business growth. This can only be achieved by demonstrating both the effectiveness of security in reaching business goals and the efficiency of Security Operations in the pursuit of those goals. Although Performance and Financial Maturity and Communications Maturity can be seen as subordinate to Business Alignment Maturity, they are addressed as separate topics for the purpose of this article.

Building Business Value

Having taken the first steps in establishing trust, security leaders should begin the journey to alignment by creating a simple mission statement demonstrating this alignment to the business. Short and direct is best. For example: The mission of Security Operations is to promote profitable business growth by reducing losses associated with cyber incidents, enhancing and optimizing the operational performance of the organization, and building and nurturing the trust that prospects, customers, partners, and employees have in our company.

To do this, we need to frame security projects in the context of these 3 points:

- How does this initiative measurably reduce the potential for business loss?
- How does this initiative measurably make work easier and more efficient? How can we show that employees will work more easily, more smoothly, and more effectively?
- How will this initiative build trust with our prospects, customers, employees, and partners?

Measurably reducing the potential for business loss

Measurably reducing the potential for business loss starts with ensuring that we align and prioritize controls to the risks of the business. This process builds a chain, or mapping, from Business Risk to specific actions in the security department. More importantly, it offers the business transparency and insight into why specific controls have been put in place, and why specific actions have been taken by the security team.

In the past, it may have been sufficient for those in the information security profession to simply tell leadership that they understood the business and had aligned controls and processes to the organization’s business risk. However, I’ve met with hundreds of security organizations in the past twenty years and found that many of them didn’t fully understand the value chains within their company. Some were not aware of exactly how their company makes money, or even what the organization’s most critical assets are and where they reside.

Two real-world examples

This problem can be brought into sharp focus with two real-world examples. For instance, while consulting with a company that designs and manufactures complex industrial technology products, I asked the security operations leaders to identify the most critical and valuable intellectual property of the company. They unanimously agreed that the product specifications and manufacturing process documents were the most critical company IP.

I then asked where these documents were located. I was told that they were on highly secured servers in the company’s core data center. Finally, I inquired as to the location of the factories. They responded that there were seven manufacturing facilities located globally, but they were not sure of the security controls at those facilities. Clearly this is a security disconnect.

A factory can’t build and QA test a product without having the product specifications and the manufacturing process details. The core IP was spread across at least eight locations, and in seven of these locations the extent of the security controls was not known by those who have the responsibility for protecting the IP. Further, we must consider the transfer of that data, the frequency of the transfer, and the encryption limitations imposed by the governments of the countries in which the factories are located.

In a second example, the client was a company that derived over 50% of its revenue from maintenance and servicing of specialized equipment at commercial sites. The client was quick to agree that the detailed service and repair manuals were a critical part of the business. And, since the service technicians probably didn’t carry a steamer trunk of manuals with them, the manuals were most likely accessed remotely in digital format. So, how were they protected? Where were the documents located? What authentication and encryption methods were used for remote access? What kind of endpoint security was deployed on the technician platforms? How quickly was access disabled for terminated employees? Were the documents distributed via a CDN? Was the server susceptible to a DDoS attack?

The team was unsure of the specifics for this application or data type, but responded that since the security team secured the entire company and all related infrastructure and user endpoints, this issue was covered by default. Of course, most CISOs have already learned that the strategy of protecting all things equally is both wasteful and destined to fail.

Risk to action mapping

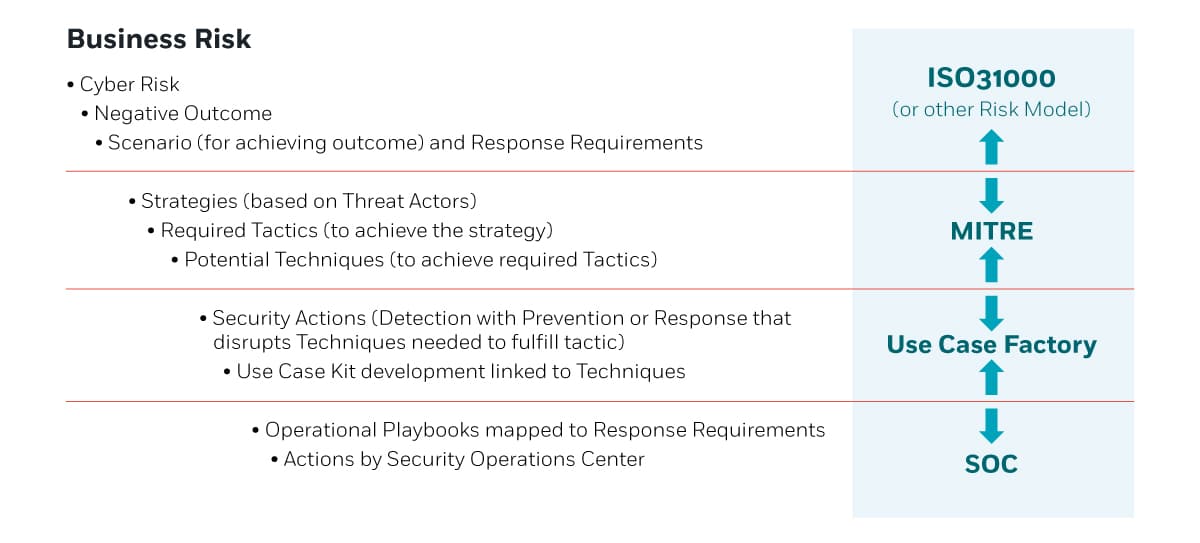

The bottom line here is that if Security Operations is not well versed in the organization’s business processes and data flows, it is not empowered to protect those processes or the data that serves as the lifeblood of those processes. The model and process presented in the figure below not only ensure that alignment by engaging the business, but also create artifacts that prove alignment and the value of the outcome:

Figure 1 Risk to action mapping

Aligning SOC Use Case (UC) development to the business is fundamental to the value outcome. First, we need a working definition: a Use Case is a combination of a detection mechanism, a planned response, and any automation or tooling required to achieve an effective response within the Response Window defined in the incident Impact Curve (described later in this article).

The process for deriving risk-based Use Cases typically involves a series of Scenario Workshops over the course of one to two weeks, depending on the complexity of the environment and how well the business leadership has defined their top risks. While a detailed description of the process is outside the scope of this article, Figure 1 depicts the general flow of that process and the associated resources.
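
The chain from Business Risk to specific actions can be captured as a simple data structure, which also yields an artifact the business can review. The following is a minimal sketch, not the author's tooling: the class names, the `trace` helper, and the example risk, scenario, and Use Case are all hypothetical and only illustrate the working definition of a Use Case given above.

```python
from dataclasses import dataclass, field


@dataclass
class UseCase:
    """Detection mechanism + planned response + automation (hypothetical model)."""
    name: str
    detection: str
    response: str
    automation: list[str] = field(default_factory=list)


@dataclass
class Scenario:
    """An attack scenario broken into techniques, each mapped to its Use Cases."""
    name: str
    techniques: dict[str, list[UseCase]]


@dataclass
class BusinessRisk:
    name: str
    scenarios: list[Scenario]


def trace(risk: BusinessRisk) -> list[tuple[str, str, str, str]]:
    """Flatten the chain into (risk, scenario, technique, use case) rows.

    Techniques with no Use Case are reported as UNCOVERED so that gaps
    are visible to the business as well as to the SOC.
    """
    rows = []
    for scenario in risk.scenarios:
        for technique, use_cases in scenario.techniques.items():
            if not use_cases:
                rows.append((risk.name, scenario.name, technique, "UNCOVERED"))
            for uc in use_cases:
                rows.append((risk.name, scenario.name, technique, uc.name))
    return rows


# Illustrative data only; none of these names come from a real engagement.
uc = UseCase(
    name="UC-01 bulk download of design documents",
    detection="alert on unusually large reads from the specification share",
    response="suspend the account and notify the IP owner",
    automation=["disable-account playbook"],
)
risk = BusinessRisk(
    name="Theft of product specifications",
    scenarios=[
        Scenario(
            name="Insider copies specifications at a factory site",
            techniques={
                "bulk file access": [uc],
                "copy to removable media": [],
            },
        )
    ],
)

rows = trace(risk)
for row in rows:
    print(" -> ".join(row))
```

Each printed row is one link in the chain, so a reviewer can read from the business risk on the left to the security action on the right.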

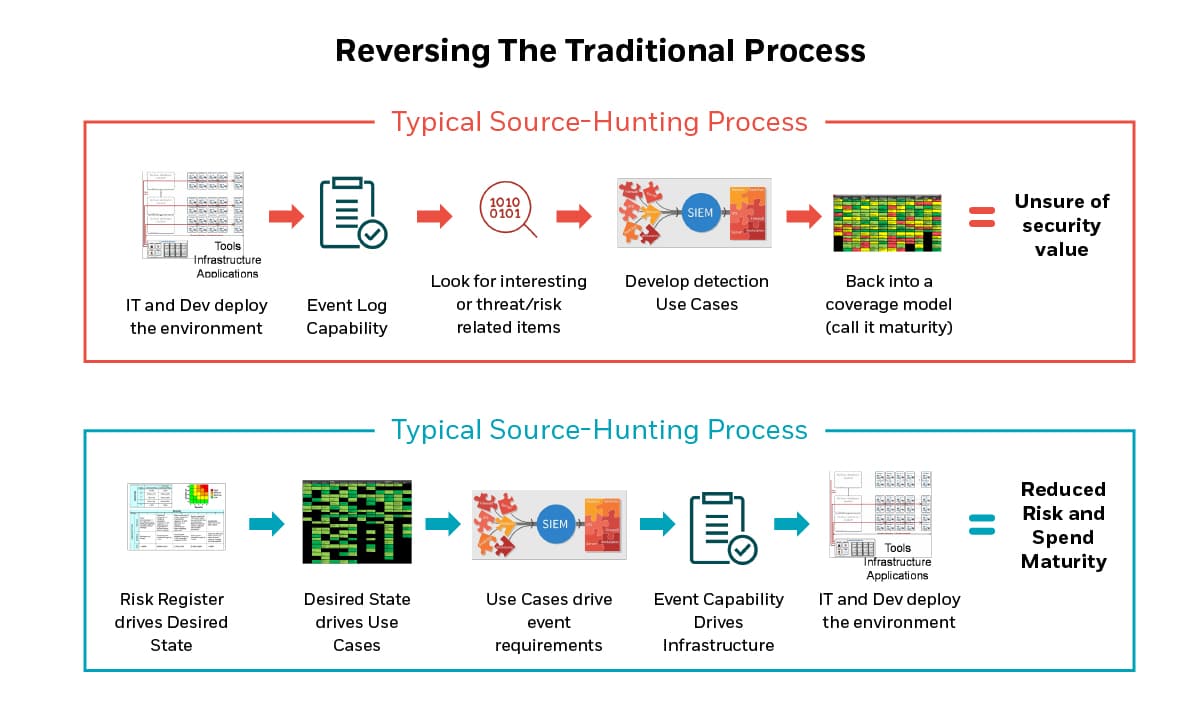

This top-down approach to Use Case (UC) development also requires rethinking the traditional approaches to log monitoring. The traditional approach to monitoring is to ingest all possible logs and look for something interesting. Although this method does ensure an excellent repository of events for later audit, and provides a fertile data lake for AI/ML projects, it is also expensive. Further, if a log source or type is not actually used in a detection rule, then the ingestion and storage cost is wasted. Most troubling, however, is that this method does not help us find what we’re looking for, because the process didn’t include the critical first step of defining what we’re looking for.

The top-down approach, on the other hand, starts with scenarios that define the evidence trail that would be created during an undesirable event. We can use that evidence trail to create a “desired state”, or detection target state, for the techniques leveraged across all scenarios. From there, we can complete the UCs by adding the responses and automations required. Looking through the log sources to see if we’re collecting that evidence then becomes one of the last steps in the process, followed by log source re-tooling where gaps are identified.

This essentially reverses the traditional methodology, but ensures that the outcome reduces storage and ingestion costs, focuses on what’s most important, and ensures alignment to the needs of the business. Here is a depiction of this modified process flow.

Figure 2 Use Case development process

Obviously, this description is very high level, and the complete process is more complex and beyond the scope of this article.
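
The late step of checking log sources against the evidence that the scenarios require can be sketched with plain set operations. This is only an illustration under stated assumptions: the function name `evidence_gaps`, the technique names, and the log source names are hypothetical, and a real environment would map evidence at a finer grain than whole log sources.

```python
def evidence_gaps(required: dict[str, set[str]], collected: set[str]):
    """Compare the evidence the scenarios call for with what is ingested.

    required:  technique -> log sources that would hold its evidence trail
    collected: log sources currently ingested
    Returns (missing, unused):
      missing: technique -> required sources that are not collected
      unused:  collected sources that no technique relies on
    """
    needed = set().union(*required.values())
    missing = {
        technique: sources - collected
        for technique, sources in required.items()
        if sources - collected
    }
    unused = collected - needed
    return missing, unused


# Hypothetical detection target state derived from scenario workshops.
required = {
    "bulk file access": {"file server audit"},
    "copy to removable media": {"endpoint device control"},
    "remote login with stolen credentials": {"vpn gateway", "identity provider"},
}
# Hypothetical list of what is being ingested today.
collected = {"file server audit", "vpn gateway", "print server", "dns"}

missing, unused = evidence_gaps(required, collected)
print("Re-tooling needed:", missing)
print("Candidates to stop ingesting:", unused)
```

The `missing` result drives log source re-tooling, while `unused` lists sources whose ingestion and storage cost is not supported by any detection target.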

While the deductive process (see Figure 1) provides a path from Risk to Action, Value reporting back to the business requires inductive reasoning and a probabilistic approach. Because a single technique may support many risk scenarios, security has the task of piecing together detected and mitigated techniques in order to map back to the most likely scenario. It must then estimate the impact that would have occurred had the scenario progressed to conclusion without the intervention of Security Operations.

This has traditionally relied on deep expertise and experience in the organization’s SOC, but AI-based scenario recognition models can play an important role in this process and significantly reduce the effort. The following figure depicts the full journey from Risk to Value for this model.

Figure 3 Risk to value journey
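
To make the inductive step concrete, here is a minimal sketch that scores scenarios from the techniques that were detected and mitigated. It substitutes a simple naive Bayes-style calculation for the AI-based scenario recognition mentioned above, and it is not the model the article refers to. The scenario names, priors, likelihoods, `floor` value, and impact figures are all hypothetical.

```python
def rank_scenarios(observed, scenarios, floor=0.01):
    """Return a normalized score per scenario given the observed techniques.

    Each scenario has a prior and, per technique, an assumed probability of
    seeing that technique if the scenario is under way. Techniques that a
    scenario does not list get a small floor probability instead of zero.
    Techniques are treated as independent, which is a simplification.
    """
    if not scenarios:
        return {}
    scores = {}
    for name, spec in scenarios.items():
        score = spec["prior"]
        for technique in observed:
            score *= spec["likelihood"].get(technique, floor)
        scores[name] = score
    total = sum(scores.values())
    return {name: score / total for name, score in scores.items()}


# Hypothetical scenario library; every number here is illustrative.
scenarios = {
    "ransomware": {
        "prior": 0.5,
        "likelihood": {"phishing": 0.8, "lateral movement": 0.9},
        "max_impact": 1_000_000,
    },
    "ip theft": {
        "prior": 0.5,
        "likelihood": {"phishing": 0.6, "bulk file access": 0.9},
        "max_impact": 5_000_000,
    },
}
observed = ["phishing", "lateral movement"]

posterior = rank_scenarios(observed, scenarios)
most_likely = max(posterior, key=posterior.get)
estimated_avoided_impact = scenarios[most_likely]["max_impact"]
print(most_likely, round(posterior[most_likely], 3), estimated_avoided_impact)
```

The estimate reported back to the business is then the impact of the most likely scenario, stated together with its score so the uncertainty stays visible.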

The challenge of valuating loss

There are countless books on this topic, but I’ll offer some basic concepts that will help frame the process. First, we need to stop treating risk mitigation as binary. The truth is that the terms “always” and “never” have very limited applicability in the world of cyber security. Admittedly, mitigation solutions for a specific attack technique can in some cases be posited as present or not present with the assumption that when present, the technique prevention is 100 percent effective.

However, this is far from typical. And, when examining an end-to-end scenario involving numerous techniques and multiple potential kill-chain pathways, a solution with a binary outcome of 100 percent success or 100 percent failure is frankly difficult to imagine.
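
A small worked example shows why the outcome is rarely binary. The sketch below assumes that each mitigation along a kill-chain pathway stops its technique with some probability, and that mitigations and pathways act independently. Both are simplifying assumptions made for illustration, and the effectiveness values are hypothetical.

```python
from math import prod


def path_success(effectiveness: list[float]) -> float:
    """Probability that an attacker gets past every mitigation on one pathway."""
    return prod(1.0 - e for e in effectiveness)


def scenario_success(paths: list[list[float]]) -> float:
    """Probability that at least one pathway succeeds end to end."""
    return 1.0 - prod(1.0 - path_success(p) for p in paths)


# Two hypothetical pathways; each number is the assumed effectiveness
# of the mitigation guarding one technique on that pathway.
paths = [
    [0.9, 0.5],
    [0.8, 0.7, 0.5],
]

residual = scenario_success(paths)
print(f"Residual probability of scenario success: {residual:.4f}")
```

Even with individually strong controls, the residual probability is neither zero nor one, which is why mitigation is better expressed as a reduction in likelihood than as present or not present.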

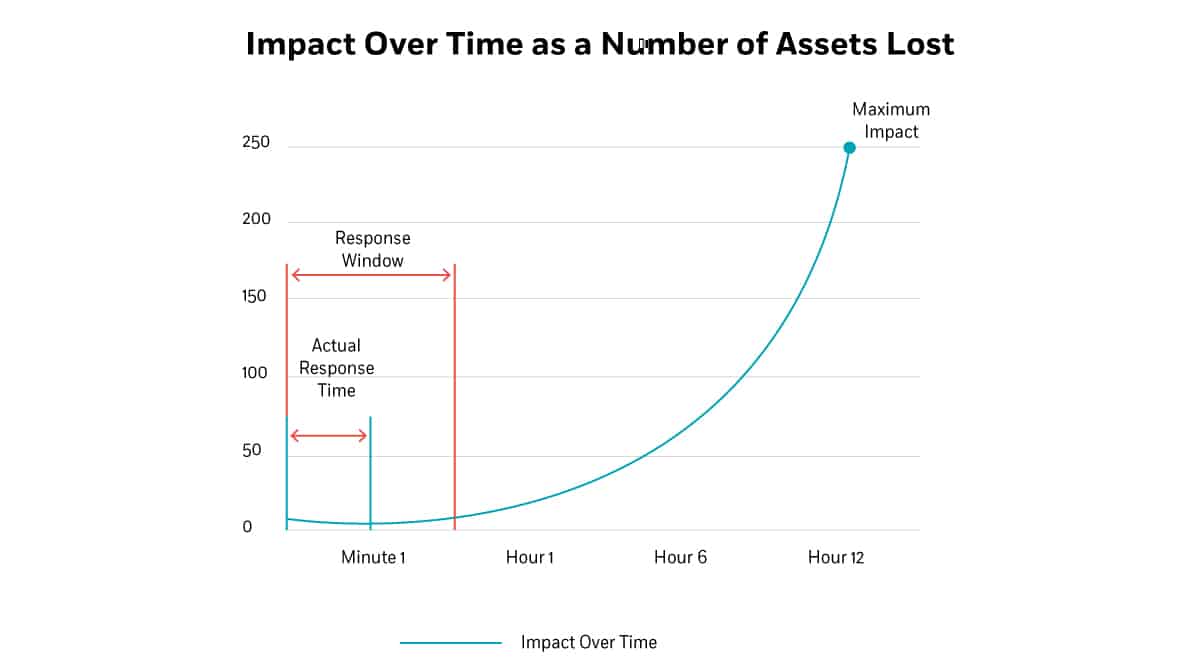

This is further complicated by the fact that incident impact is generally a function of time. In other words, the point at which a mitigation is applied can have a profound effect on the efficacy of the mitigation and on the resulting impact of the attack.

With this in mind, consider the concept of the Impact Curve. This states that:

- For every Attack Scenario or Cyber Loss Occurrence type, there is a unique Impact Curve that defines the amount of loss over time.
- Maximum Impact is the maximum amount of loss potential associated with an Attack Scenario or Cyber Loss Occurrence type if no mitigation or response is applied.
- The Response Window represents the amount of loss for a specific Attack Scenario or Cyber Loss Occurrence type that is deemed the maximum acceptable loss by the organization. It may also be the point on the curve that represents a tipping point of diminishing returns in mitigation expenditure.
- Target Response Time is the incident response time goal within which the specific Attack Scenario or Cyber Loss Occurrence type must be terminated or mitigated in order to ensure that losses for the incident remain below the maximum acceptable loss. This is also the metric for the efficacy of the solution.

This concept is depicted in the figure below using a self-replicating ransomware attack where all 250 target systems are vulnerable. Note that other attacks may have a nearly flat impact curve (such as a volumetric DDoS attack on an e-commerce site), or more of a hockey-stick curve (for a long-term exfiltration campaign):

Figure 4 Impact curve
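
The relationship between the Impact Curve, Maximum Impact, the maximum acceptable loss, and Target Response Time can be sketched numerically. The example below assumes a logistic spread for the self-replicating ransomware case with 250 vulnerable systems. The logistic shape, the growth rate, the cost per system, and the acceptable loss figure are all assumptions chosen for illustration and are not taken from the figure.

```python
import math


def infected(t_hours, total=250, rate=1.2, initial=1):
    """Systems encrypted after t hours under an assumed logistic spread."""
    return total / (1 + (total / initial - 1) * math.exp(-rate * t_hours))


def loss(t_hours, cost_per_system=4000.0, total=250, rate=1.2, initial=1):
    """Impact Curve: loss as a function of time (hypothetical cost model)."""
    return infected(t_hours, total, rate, initial) * cost_per_system


def target_response_time(max_acceptable_loss, cost_per_system=4000.0,
                         total=250, rate=1.2, initial=1):
    """Latest time at which loss still equals the maximum acceptable loss.

    Obtained by inverting the logistic curve for the number of systems
    that corresponds to the acceptable loss.
    """
    k = max_acceptable_loss / cost_per_system
    if k <= initial:
        return 0.0          # the acceptable loss is already exceeded at t = 0
    if k >= total:
        return math.inf     # even Maximum Impact is acceptable
    return math.log(k * (total - initial) / (initial * (total - k))) / rate


maximum_impact = 250 * 4000.0                 # all systems lost, no response
t_target = target_response_time(100_000.0)    # hypothetical acceptable loss
print(f"Maximum Impact: {maximum_impact:,.0f}")
print(f"Target Response Time: {t_target:.2f} hours")
print(f"Loss at target time: {loss(t_target):,.0f}")
```

Changing `rate` or `cost_per_system` reshapes the curve, which is the point of the concept: each Attack Scenario needs its own curve before a Target Response Time can be set for it.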

Making work not just secure, but also easier and more efficient

Now let’s explore the next critical component of the mission statement to see how security can measurably make work easier and more efficient. This topic should begin by dispelling any belief that security can’t make things easier.

When you compare single-sign-on to logging into every application separately, it’s clear that a well-planned security control can indeed make the lives of employees easier. While I could list hundreds of possible business scenarios, I’ll focus on just a few in order to make the philosophical point of this component.

That is, view every need of the business through the following questions: How can the security team make that happen in a secure and convenient way, and can security add more capability on top of the initial request to add value? It is incumbent on the Security department to look for creative ways to make business operations better, not just more secure.

One request could be to support access to email and customer management applications for mobile salespeople. Security could respond by providing a VPN that supports remote access from corporate laptops. Or, Security could offer a way to use any device, anywhere, at any time, by creating a Trust Enabled Access architecture (the topic of a different article).

Another example could be the creation of a secure data repository. There could certainly be an on-premises data center approach over a secure VPN, with separate logins for the VPN and the application. But what about a highly secure cloud storage solution enabled with Single Sign-on (SSO) and available natively on multiple platform types from anywhere? Again, being creative in secure solutioning will position security as a business enabler.

Trust as the key to business

The final element of the Security value triad is Trust. Why is Trust so important? While it is true that cost and features are major customer considerations, Trust is the foremost factor that turns a prospect into a customer, and turns a one-time customer into a lifetime customer. Trust is a top consideration driving companies to form business partnerships. And, Trust is a leading factor in a company’s ability to attract and retain top talent.

Further, the Trust of customers, partners, and employees in an organization’s ability to protect and secure their private and sensitive data is of paramount importance. Therefore, Trust is fundamental to any business venture.

One of the best ways to win trust is through transparency. Organizations must be able to explain why they are trustworthy and how they are ensuring that trustworthiness. Even more critical than transparency is proof, and simply saying we’ve never had a reportable breach is not proof.

For companies that have had a public breach, the burden of proof is particularly challenging. The best proof of trustworthiness comes from a comprehensive Testing, Certification, and Audit Program that demonstrates the effectiveness, consistency, and reliability of the organization’s Security and Privacy Controls Framework. Note that this is not a destination, but instead a continuous journey of improvement and validation.
